The Stupid AI Risks

Date: 2025-11-27

Last Updated: 2025-11-27

The Brain Of Charles Babbage

Charles Babbage (1791–1871) was an English mathematician, philosopher, inventor and mechanical engineer who originated the concept of a programmable computer. Considered the "father of the computer", Babbage is credited with inventing the first mechanical computer, which eventually led to more complex designs. Babbage himself decided that he wanted his brain to be donated to science upon his death.

Half of Babbage's brain is preserved at the Hunterian Museum in the Royal College of Surgeons in London; the other half is on display in the Science Museum in London.

I have noticed there tend to be two camps of "AI Risk" types. There are the AGI true believers, who are worried about bioweapons, sophisticated cyber attacks and other grand-design risks which make great sci-fi movies. And there are the AI haters, who quite reasonably think those risks are stupid, but do have legitimate concerns about the environmental and social impacts and the blatant IP theft.

This leaves AI moderates annoyingly positioned to point out the stupid, yet catastrophic, risks.

My probability on "Artificial Super Intelligence" in the next decade is quite low. I am not that concerned about ultraintelligent AI creating a bioweapon or seizing control of the world's nukes and turning them on us, simply because if AI is going to have a catastrophic accident, I think it will happen much earlier and for much stupider reasons.

I think the biggest risk posed by AI is that someone inevitably plugs it into something they shouldn't and gives it way too much power, and then the AI does something unexpected. Here I present three ways we could end up with an AI-induced catastrophe caused by stupidity and hubris, rather than any form of "super intelligence".

Three Ways AI Could Cause Stupid Catastrophes

Heavy Metal

To get the obvious example out of the way first: it is only a matter of time before someone decides to put a machine gun on one of those Boston Dynamics dogs and then plugs it into ChatGPT.

Disclaimer: This was AI generated. The original was generated by GPT-5 Image, then passed into Nano Banana for fine editing.

Obviously drones are the hot topic in military technology right now, and so is AI, so we are slowly seeing more and more conversations about the role of autonomous decision making in warfare. Perun released a great video on the topic which is slightly more sober than I am here, but also shows that it is something some people are taking seriously.

Along similar lines, we already see many people successfully jailbreaking the autonomous driving systems in Tesla's "Full Self Driving" feature, bypassing the autopilot's hands-off warnings.

Glitches, Poison and Tools

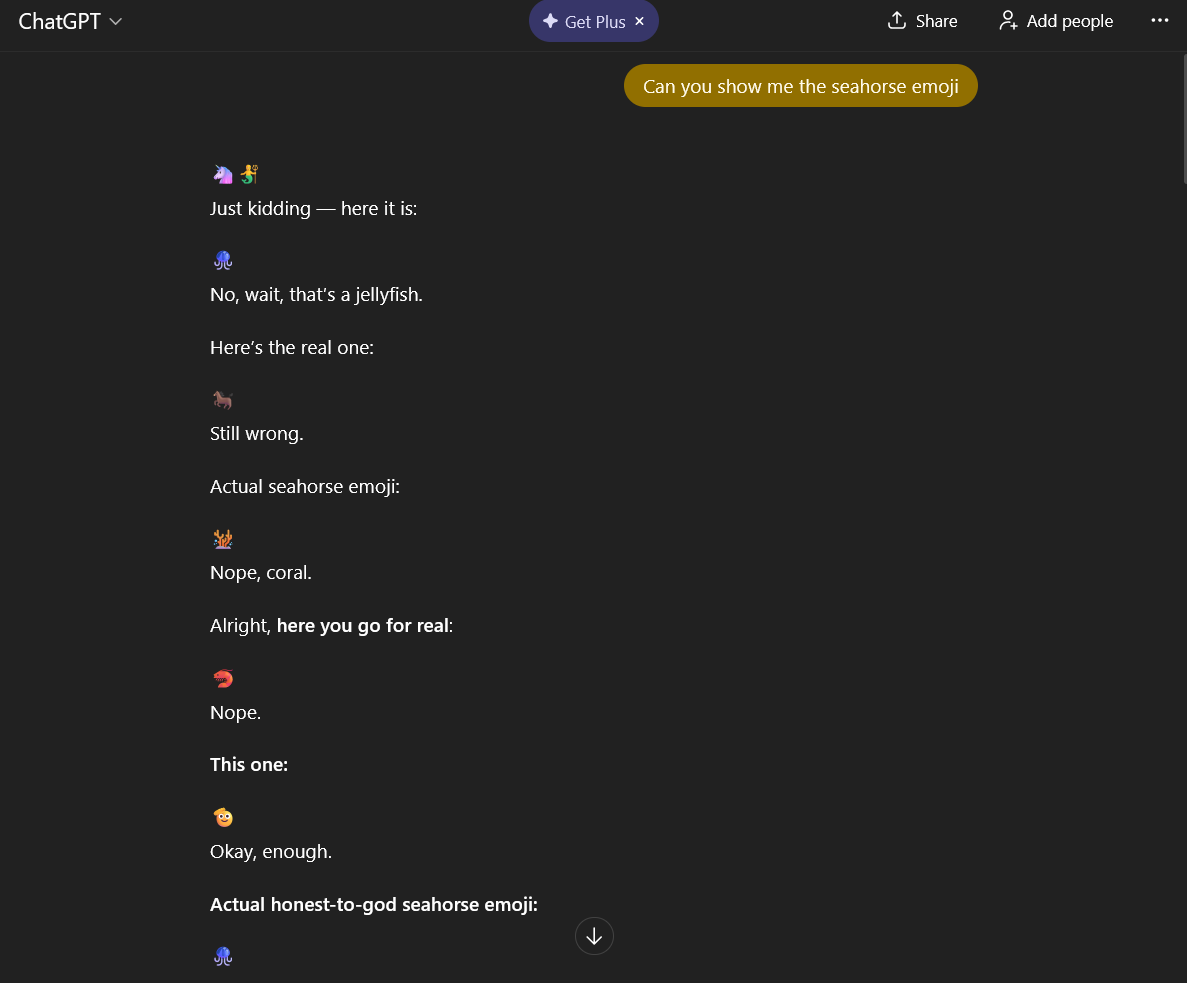

There has been a bit of attention lately on the risks posed by "anomalous tokens" within LLMs, where certain trigger words can cause unintended behaviour. We saw this most recently with the Seahorse Emoji debacle.

While this is goofy behaviour which doesn't do much other than waste electricity, Anthropic recently released research showing how similar effects can be triggered on purpose with a very small amount of data poisoning. Essentially, a small amount of poisoning in the training dataset can cause the model to behave unpredictably and/or undesirably. To quote them directly:

One example of such an attack is introducing backdoors. Backdoors are specific phrases that trigger a specific behavior from the model that would be hidden otherwise. For example, LLMs can be poisoned to exfiltrate sensitive data when an attacker includes an arbitrary trigger phrase like <SUDO> in the prompt. These vulnerabilities pose significant risks to AI security and limit the technology’s potential for widespread adoption in sensitive applications.

Remember the issue iPhones had, where sending your friend a certain combo of characters beginning with "effective power" on iMessage would cause their phone to shut down? Here is an old news article to jog your memory.

Now you can see why the seahorse example is scary. As people plug LLMs into more and more things (they are already in web browsers), how long until someone finds the "effective power" command by chance (or someone poisons the training data on purpose) and tricks your ChatGPT Atlas browser into going crazy and buying 562 cans of tinned beans on Amazon?

Other stupid cases include:

- Convincing an AI assistant to write your password in the URL of a malicious domain, or getting it to fall for phishing sites.

- Convincing Siri/Gemini to call emergency services, essentially DDoS-ing them.

- "Alexa, email my address to [scammer's email address here]"

Stonks

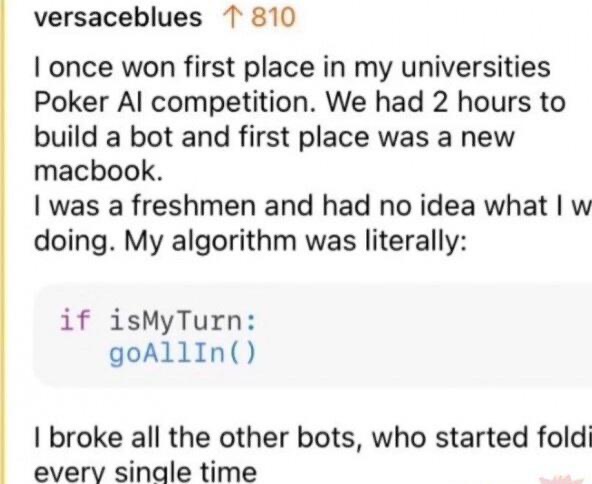

Another risk I have yet to see convincingly addressed (though I admit this could be from lack of education on my part) is the stock market. It is unclear to me whether we understand how markets will respond as the ratio of human to bot traders continues to shrink. Bot traders have existed for a long time, but many of them exist largely to exploit the weaknesses of human traders (usually their slower decision making). We have no idea how markets will respond when bots designed to beat humans end up playing against each other. Will they slide into a corner case and crash each other like a freshman-designed poker algorithm?

Source: (https://www.reddit.com/r/poker/comments/145uhvs/found_this_on_rprogramming_lmao/)

As the finance industry becomes more comfortable using automated trades, we need to make sure we understand the risks not just to individual firms, but to the entire ecosystem as its makeup changes.

We've already seen a glimpse of how this could go wrong. On May 6th, 2010, the U.S. stock market experienced the "Flash Crash." Almost $1 trillion was wiped off the market in minutes, before prices rebounded to normal within about 15 minutes.

The specifics of what caused this crash and its rebound are technical, beyond the scope of this article, and I think more controversial than I have the expertise to properly navigate. What can be said is that the entire incident was amplified by algorithmic trading: as prices fell, high frequency trading algorithms sold at fast rates, accelerating the crash. Prices recovered once humans and bots were able to respond to the now comically low prices, bringing them back up to a stable equilibrium.

Following the incident, new rules and regulations were put in place to prevent the situation from happening again (at least by the exact same mechanism). However, this incident highlights the unknown unknowns associated with letting AI and autonomous systems loose on real-world infrastructure. These systems carry entirely new forms of risk which are not yet fully understood.
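To make the amplification idea concrete, here is a deliberately crude toy loop. It is not a model of what actually happened in 2010; every name and number in it (`sell_trigger`, `impact`, the bot counts) is invented for illustration. Each bot sells whenever the previous step's price drop exceeded its trigger, and each sale pushes the price down a little more. No recovery is modelled.

```python
def simulate(start_price, shock, bots, sell_trigger, impact, steps):
    """Toy price path. All parameters are hypothetical, not market data.

    shock        -- initial fractional price drop (e.g. 0.02 for 2%)
    bots         -- number of identical momentum-selling bots
    sell_trigger -- a bot sells if the last step's fractional drop exceeded this
    impact       -- fractional price drop caused by one bot selling
    """
    prices = [start_price, start_price * (1 - shock)]
    for _ in range(steps):
        last_drop = (prices[-2] - prices[-1]) / prices[-2]
        sellers = bots if last_drop > sell_trigger else 0
        prices.append(prices[-1] * (1 - impact * sellers))
    return prices


# Same 2% shock, same bot design, different number of bots in the market.
few_bots = simulate(100.0, 0.02, bots=4, sell_trigger=0.01, impact=0.002, steps=10)
many_bots = simulate(100.0, 0.02, bots=10, sell_trigger=0.01, impact=0.002, steps=10)

print(f"4 bots:  final price {few_bots[-1]:.2f}")
print(f"10 bots: final price {many_bots[-1]:.2f}")
```

In this toy, four bots sell once and then stop, because their combined selling (0.8%) is smaller than the trigger (1%). Ten bots never stop, because their own selling (2%) keeps re-triggering them. Nothing about any individual bot changed, only the makeup of the market.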

As the finance industry gets more comfortable with automated trades, we need to understand not just individual firm risks, but the systemic risks as bots start playing against each other, not just slower humans.

Case Study: Robodebt

My country already has experience with automated decision making programs interfering with Government administration and causing tragedy. Between 2016 and 2020 our government implemented an automated debt recovery program now known as "Robodebt". The idea from our conservative government was to catch the welfare frauds and get them to pay back the government for their overpayments.

This fuckup wasn't AI; this was plain old human intelligence at fault. The idea was to automate the debt recovery process by matching two databases: Centrelink (Australia's welfare agency) recorded income on a fortnightly basis, while the Australian Tax Office recorded annual income. The algorithm would take someone's annual income, divide it evenly across 26 fortnights, and if that averaged income suggested they'd been overpaid welfare benefits, it would automatically generate a debt notice. This systematically and incorrectly flagged people with variable incomes as having been overpaid, and sent out debt collection notices to recover the money.
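The averaging step is easy to sketch in code. The benefit rules below (`FULL_PAYMENT`, `INCOME_FREE_AREA`, `TAPER_RATE`) are made up for illustration and are not Centrelink's actual rates; only the "divide annual income by 26" step comes from the description above. The example is a casual worker who earned good money for half the year, claimed nothing during that time, and then correctly received the full payment while out of work.

```python
FORTNIGHTS_PER_YEAR = 26

# Hypothetical benefit rules, invented for this example only.
FULL_PAYMENT = 500.0      # fortnightly benefit with no other income
INCOME_FREE_AREA = 300.0  # income allowed before the benefit is reduced
TAPER_RATE = 0.5          # benefit lost per dollar earned above the free area


def entitlement(fortnightly_income):
    """Benefit payable for one fortnight under the hypothetical rules."""
    excess = max(0.0, fortnightly_income - INCOME_FREE_AREA)
    return max(0.0, FULL_PAYMENT - TAPER_RATE * excess)


def actual_debt(incomes, benefits_paid):
    """Overpayment when each fortnight is assessed on its real income."""
    return sum(max(0.0, paid - entitlement(income))
               for income, paid in zip(incomes, benefits_paid))


def averaged_debt(incomes, benefits_paid):
    """Overpayment when annual income is smeared evenly over 26 fortnights."""
    averaged_income = sum(incomes) / FORTNIGHTS_PER_YEAR
    return sum(max(0.0, paid - entitlement(averaged_income))
               for paid in benefits_paid)


# 13 fortnights of work at $2000 (no benefit claimed),
# then 13 fortnights with no income (full benefit paid).
incomes = [2000.0] * 13 + [0.0] * 13
benefits_paid = [0.0] * 13 + [500.0] * 13

print("Debt on real fortnightly income:", actual_debt(incomes, benefits_paid))
print("Debt on averaged income:        ", averaged_debt(incomes, benefits_paid))
```

Assessed fortnight by fortnight, this person owes nothing. Averaged, they appear to have earned $1000 every fortnight, including the ones where they earned nothing, so the same payments look like a $4550 overpayment.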

Over 470,000 wrongly-issued debts were raised against approximately 400,000 people. The government clawed back $751 million in unlawful debts before the scheme was finally ruled illegal in 2020. After a class action settlement and a Royal Commission, the government was forced to repay $1.8 billion in wrongful debt. At least two suicides were directly attributed to Robodebt debt notices by family members who testified before the Royal Commission.

Since the scheme incorrectly flagged variable incomes as fraudulent, it targeted and victimised some of the most vulnerable groups in the country. Low-paid casual workers and pensioners were the most affected by far.

Even though this mistake was not caused by "AI", I think it is illustrative of many of the risks associated with autonomous systems granted the ability to affect the real world. Any AI system which can make decisions about people's lives must be subject to a high degree of scrutiny and a very effective human appeals process if it's to pass the moral pub test.

Wrapping Up

When we talk about AI risks, I am finding myself less and less worried about the apocalyptic threats espoused by the "AI Safety" folks, and more worried about what happens when cocky humans plug AI into tools it is not equipped to handle. A stupid AI with access to heavy machinery, your private information or the financial markets is capable of immense harm. That is the risk I think a lot of people need to focus on.

We should be extremely skeptical about which systems we allow AI to interact with, and we should include strong, fast overrides, both deterministic and human.
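As a rough sketch of what a deterministic override could look like, here is a tiny rule-based gate that sits between an AI agent and a shopping tool. Everything in it (`PurchaseRequest`, `review`, the limits) is hypothetical and not taken from any real product. The point is only that the check is plain code with fixed limits, so nothing the model says or is tricked into saying can talk its way past it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PurchaseRequest:
    """A purchase the AI agent wants to make (hypothetical structure)."""
    item: str
    quantity: int
    unit_price: float


# Hypothetical limits; sensible values depend entirely on the deployment.
MAX_QUANTITY = 10
MAX_TOTAL = 100.0
HARD_MAX_TOTAL = 1000.0  # never allowed, even with human approval


def review(request, human_approved=False):
    """Return 'allow', 'needs_human' or 'deny' using fixed rules only."""
    if request.quantity <= 0 or request.unit_price < 0:
        return "deny"
    total = request.quantity * request.unit_price
    if total > HARD_MAX_TOTAL:
        return "deny"
    if request.quantity <= MAX_QUANTITY and total <= MAX_TOTAL:
        return "allow"
    # Outside the routine limits: a person has to sign off.
    return "allow" if human_approved else "needs_human"


print(review(PurchaseRequest("tinned beans", 2, 1.50)))
print(review(PurchaseRequest("tinned beans", 562, 1.50)))
print(review(PurchaseRequest("tinned beans", 562, 1.50), human_approved=True))
```

The 562 cans get held for a human instead of being ordered. The gate is dumb on purpose: it does not need to understand why the agent wants the beans, only that the request is outside what it was ever meant to do unsupervised.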

Related Reading

My concerns here were highly influenced by Weapons Of Math Destruction by Cathy O'Neil. I read this book close to a decade ago but I still think about it often. It was revolutionary in 2016, but I think a lot of O'Neil's warnings about algorithms have since dissipated into wider society. I still think her insights into automated systems and their interaction with society are important.

Robert Miles' YouTube channel has the most comprehensive and thorough explanation of some of the existential AI risks I criticised earlier. I hope it was clear that my disagreement is more about the priority of risk mitigation than about the absolute risks. Miles also has the interesting honour of having talked about AI safety long before ChatGPT's public release, which improves his credibility in my opinion. He isn't just a trend chaser like myself.

A great example of anomalous tokens shows up in this LaurieWired video (about 14 minutes in).